Could SMS Have Prevented March 27th Disaster?

By OffRoadPilots

Whether the safety management system (SMS) of today could have prevented the worst aviation accident in history, the March 27, 1977, collision of two B-747s on the runway at Los Rodeos Airport on Santa Cruz de Tenerife, is a question without answers. There are no answers because SMS is forward-looking, and accidents cannot be predicted until the last few seconds, when it is inevitable that an accident will occur. At the time of the accident, it was assumed that aviation was operating with safe and fail-free systems, except for pilot errors, the bad apples in the box. Pilot error had become the industry-accepted root cause of accidents. It is unknown when pilot error became the popular root-cause explanation, but accident reports from the late 1960s and early 1970s reflect this practice. However, after the June 30, 1956, Grand Canyon disaster, the probable cause of the mid-air collision was not attributed to pilot error; instead, the investigation found that the pilots did not see each other in time to avoid the collision, owing to multiple other factors. Human factors are not the same as human error. Human errors and other negative outcomes are not useful targets for intervention to improve safety; they are symptoms of much deeper causes within systems.

Before we answer the question of whether an SMS could have saved both aircraft, let’s look at what SMS is. SMS introduces an evolutionary, structured process that obligates organizations to manage safety with the same level of priority as other core business processes. SMS is a structured means of safety risk management decision-making; it is a means of demonstrating safety management capability before system failures occur; it provides increased confidence in risk controls through structured safety assurance processes; it is an effective interface for knowledge sharing, both internally and with external organizations; and it is a safety promotion framework that supports a sound safety culture and promotes business strategies. An effective safety management system is a support system to the business itself, just as several other systems are required to ensure regulatory compliance, to recognize competitors, to maintain business relations and to evaluate processes for effectiveness in meeting defined goals.

The four factors of the 1977 disaster that stand out in the accident report are human factors, such as communication and observations; organizational factors, such as authority and decision-making; supervision factors, such as air traffic services, lights and signage; and environmental factors, such as weather and airport design. Combined, these factors played their roles in the design, planning and execution of the disaster. When the decision was made to divert all aircraft to Los Rodeos, the accident process was put in place.

The first aircraft to taxi was backtracking runway 12 for departure on runway 30. It was instructed to exit the runway at the first taxiway and hold for runway 30, but was later cleared to taxi to the button of runway 30 for takeoff. The second aircraft was also cleared to backtrack runway 12, but to clear the runway at the third taxiway. After the first aircraft had lined up on runway 30, it received its departure clearance. The second aircraft was still backtracking runway 12, looking for the third taxiway exit, when the first aircraft began its takeoff on runway 30, and an accident became inevitable. At the time of the accident, runway visibility varied between 300 and 1,500 meters (about 1,000 to 5,000 ft).

The first task in operating with an SMS is to appoint an accountable executive (AE), who is responsible for the operations or activities authorized under the certificate and accountable on behalf of the certificate holder for meeting the requirements of the regulations. An SMS policy includes safety objectives, a commitment to fulfill those objectives, a policy for reporting safety hazards or issues, and a definition of unacceptable behavior. The safety policy must also be documented and communicated throughout the organization. The safety policy establishes the foundation on which to build an SMS and plants the seed that safety is paramount. Human factors, organizational factors, supervision factors and environmental factors must be linked to the safety policy to instill process awareness and accountability in all personnel. With a mature SMS, personnel are expected to have learned to consider special operations, such as the combination of hazards created by overcrowded airports, low visibility, and more aircraft on the movement area than the airport was designed to support. An SMS in 1977 would have included tools for affecting the outcome, since an accountable executive appointed by name takes pride in the role of being responsible for safety. In addition, the airport operator, ATS and the two airlines would have had a tool available: SMS processes to recognize that the combination of an overcrowded ramp and low visibility constitutes special operations, and that normal operations processes are therefore invalidated.

A regulatory requirement is that an SMS be adapted to the size, nature and complexity of the operations, activities, hazards and risks associated with the operations of the certificate holder. Since there was an abnormal number of aircraft, including an abnormal number of heavy aircraft, that day, an SMS designed for normal operations would have been scaled to much smaller operations. If an SMS had been in place that day, the airport operator or ATS would have had a tool to recognize that their SMS was not designed for, or capable of, managing the increased traffic volume. Human factors would be affected by communication and observations, organizational factors by authority and decision-making processes, supervision factors by ATS overload compared to normal operations, and environmental factors by weather and airport design. An SMS designed to the size and complexity of the operation is essential in a decision-making process to establish the limit at which the system becomes overloaded. Just as an electric cable is designed for a limited voltage, an SMS is designed for a limited load factor.
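The load-factor idea, together with the combination of congestion and low visibility that marks special operations, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a regulatory method: the names `AirportConditions`, `load_factor` and `is_special_operation`, the 80% congestion threshold and the 800 m low-visibility limit are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class AirportConditions:
    aircraft_on_movement_area: int  # aircraft currently on the movement area
    designed_capacity: int  # hypothetical: traffic the airport and its SMS were scaled for
    runway_visibility_m: float  # runway visibility in meters
    low_visibility_limit_m: float = 800.0  # hypothetical low-visibility threshold


def load_factor(c: AirportConditions) -> float:
    """Ratio of actual traffic to the traffic the system was designed for."""
    return c.aircraft_on_movement_area / c.designed_capacity


def is_special_operation(c: AirportConditions) -> bool:
    """Flag special operations: traffic over design capacity, or
    congestion combined with low visibility (hypothetical thresholds)."""
    lf = load_factor(c)
    overloaded = lf > 1.0
    congested = lf >= 0.8  # hypothetical congestion threshold
    low_vis = c.runway_visibility_m < c.low_visibility_limit_m
    return overloaded or (congested and low_vis)


if __name__ == "__main__":
    # Same hypothetical traffic, two visibility values from the range reported that day.
    print(is_special_operation(AirportConditions(7, 8, 1500)))  # False
    print(is_special_operation(AirportConditions(7, 8, 300)))   # True
```

With a hypothetical design capacity of 8 aircraft, 7 aircraft on the movement area stays below capacity, yet in 300 m visibility the sketch flags special operations, while the same traffic in 1,500 m visibility does not. The point of the design is that neither hazard alone trips the trigger; their combination does.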

An SMS is required to include a quality assurance program. A prerequisite for maintaining a quality assurance program is operational quality control. If a quality assurance program had been in place at that time, it would have included a daily quality control system in which processes are linked to regulatory requirements and safety expectations. In a business, cash is counted daily, and the same principle applies to a safety management system. Process compliance with regulatory requirements, the safety policy and process outcomes must be accounted for daily to recognize drift, limitations and volume. An SMS that day would have included tools to capture the fact that runway capacity was overloaded.

A safety management system is required to assign duties on the movement area, and on any other area at the airport set aside for the safe operation of aircraft, including obstacle limitation surfaces, only to personnel who have successfully completed a safety-related initial training course on human and organizational factors. Airside personnel that day would have been equipped with SMS tools to recognize the overload on human and organizational factors caused by the increased traffic volume and aircraft size.

An SMS is required to include a policy for the internal reporting of hazards, incidents and accidents, including the conditions under which immunity from disciplinary action will be granted. If an SMS had been in place that day, the flight crew of any aircraft, not just the two involved in the accident, would have been equipped with a tool to recognize hazards and file hazard reports by telephone or fax. A report is an SMS tool that triggers a reaction, in this case to the overloaded airport operations that day.

The accountable executive is the person accountable on behalf of the certificate holder for meeting the requirements of the regulations and for compliance with their SMS policy. The position is not intended to hold the person accountable or responsible for past incidents, but to have the person maintain oversight and communicate with workers and the regulator on issues and compliance. An SMS is required to include procedures for making progress reports to the accountable executive at intervals determined by the accountable executive, and other reports as needed in urgent cases. A report to the AE on low-visibility operations, traffic volume and aircraft size is an SMS tool for flagging urgent issues; when reported immediately, it gives the AE a basis for action and for communication with their flight crews and the airport operator.

The quality assurance program required in an SMS is a function of the SMS that establishes policies, processes and procedures. These processes and procedures are then applied in an operational quality assurance program to perform specific required tasks. One of these tasks is to perform regular audits. For airports, audits are performed using checklists covering all activities controlled by the airport operations manual. An SMS on March 27th would have included a tool to recognize the excess volume and workload, and a trigger for the airport operator to review the activities controlled by its airport operations manual.

The person managing the SMS, which could be the position of an SMS Manager, Safety Officer or Director of Safety, is required to determine the adequacy of the training required by their safety management system. This training includes indoctrination training, initial training, upgrade training and annual refresher training. Flight crews or airside personnel who had received this training would have had a tool to recognize hazardous conditions and report them through their SMS process.

The person managing the SMS is also required to monitor the concerns of the civil aviation industry in respect of safety and their perceived effect on the certificate holder, or SMS enterprise. Dispatchers at each of the airlines were monitoring diversions and weather conditions with the tools available at that time. Their SMS training would have triggered a report of this abnormal condition to their SMS, and someone would have been required to decide whether any actions were needed and whether these combined hazards were incompatible with the safe operation of an airport or aircraft.

Within an SMS, the hazards of March 27th need to be analyzed without knowing or considering the outcome, as an event in the future based on the information available at that time. An SMS cannot establish whether an incident will occur in the future, and it is therefore impossible to determine whether an SMS would have prevented the March 27th accident. On January 13th, 2023, there was a similar incident at JFK airport, except that there were no low clouds or fog. The tower could see an aircraft crossing directly in front of a departing aircraft, and the takeoff was aborted. On that day, both airlines involved were operating with an SMS, but the SMS did not prevent the incident, and an SMS by itself could not have prevented the Los Rodeos accident without applying the tools in the SMS toolbox.

However, on March 27th an SMS would have made several triggers available for flight crews, ATS and the airport operator to pause operations and assess their next steps and the special-cause variations that existed that day. At a minimum, a pause would have initiated a decision-making process for the airlines, ATS or the airport operator.

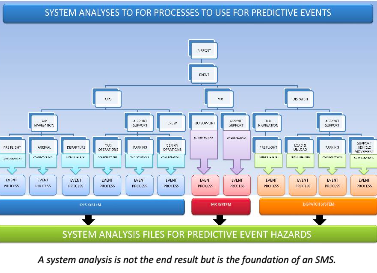

An SMS exposes the “holes in the Swiss cheese”. When the cheese is sliced, the holes within it become available to be assessed in the context of a system analysis and of the observed special operating conditions. Without an SMS, there were no triggers and no person assigned to slice the Swiss cheese that day, and that is what was missing.

OffRoadPilots