Santa’s SMS Did It Again

Catalina9

Last year Santa moved the production line south to an undisclosed location. With the anticipated supply chain shortage, the North Pole had become too remote. When Santa first moved to the North Pole, the waterways were free of ice, and ships could bring material from anywhere on the globe into Santa’s harbor for another production year. Before moving to the North Pole, Santa had the production site at an undisclosed location with easy access to and from all seven seas. When the Norsemen began to intrude on the production line and plunder toys and presents, Santa realized it was time to move to a different place, and the North Pole was chosen. It wasn’t very far for Santa to move, since the manufacturing site was nicely hidden in an ocean bay. The journey to the North Pole was just a short trip between a few islands and across a beautiful green landscape to the ice at the Pole. Moving south again last season was therefore nothing new for Santa, Mrs. Santa, or the Elves.

Santa practicing at an undisclosed location

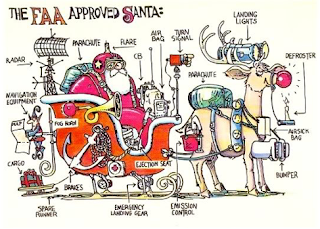

Santa had a huge task this season getting all the reindeer trained and in shape for travel. After several months of quarantine in a congested area, they had lost much of their strength, and time for training was running out. The first thing on Santa’s list was to upgrade their hooves to the current version of the rooftop autolanding system. The latest version is an autoland Category 4RVR, or four reindeer on the rooftop simultaneously, and includes a chimney glide slope, with the chimney itself geo-located for GPS, or Get-Presents-Soon, approaches that can be used anywhere. Before 4RVR GPS approaches with zero-zero visibility, Santa had to fly several approaches to make it into one single home. Sometimes the reindeer missed the rooftop and slid off the gable end. In areas with little or no snow to break the fall, Santa was hurt, unable to crawl down the chimney, and had to leave the presents outside.

Santa’s backup plan if the reindeer were permanently grounded.

By the end of the summer season, Santa had installed CAT 4RVR equipment on all four hooves of every reindeer. Once the equipment was installed, the programmed glide slope was determined for each hoof and locked in on approach for autoland. The next step was to train the reindeer to use the new system. One hazard identified was that Rudolph and the other reindeer tried to locate the rooftop visually, which caused spatial disorientation: the inability of a reindeer to determine its true body position, motion, and altitude relative to the earth or its surroundings. Since TC required all initial training to be done under the VFR, or Very Frequent Runs, regulations, simulating zero-zero visibility became a difficult task for Santa. Mrs. Claus had already completed a Change Management case, or Safety Case, on how to administer the training under the VFR regulations. It was not a simple task, but by building a 3-D virtual town in the sky, they could put eye covers on all the reindeer and do their CAT 4RVR approach training. The beauty of this 3-D virtual town was that after the reindeer came to a stop, they would not fall out of the sky but would remain on the rooftop for zero-zero departure training.

After the quarantine, all systems were treated as new and requiring first approval.

Once again Santa was ready to deliver presents. If it had not been for Santa’s SMS, or Streamlined Mission Service system, they could not have managed the enormous task of ensuring safe operations after being quarantined for several months: several months with no operational training, and several months without starting up or using the systems required to make 100% of the deliveries to 100% of the homes 100% successful 100% of the time.

Catalina9